Abundance begets abundance. This is also true in science. Every year, more than 5 million articles are published in scientific journals. That’s roughly 14,000 articles per day! How are we ever going to make sense of so much information?

Our method of choice has long been the literature review. It gives an overview of literature regarding the questions we want an answer to. The systematic review, the ‘highest level of synthesizing scientific evidence’, is all about retrieving relevant literature systematically and then screening that literature systematically against our inclusion criteria. Systematically, in this case, means we use the same decision-making processes and criteria for each of the articles, and these criteria are predefined.

What’s the problem?

The problem arises when we retrieve a huge amount of literature that needs to be screened systematically. If the topic of interest is popular in scientific circles, this problem is practically inevitable. Yet we still need to synthesize the knowledge gained from (recent) research in the field. This is what happened to me recently. While doing a systematic review in the field of health behavior change, I retrieved over 10,000 articles that needed to be screened, first by title and abstract, and then in full text.

This is partly why conducting a systematic review, from conceptualization to publication, can often take well over a year. By the time the evidence we have found is synthesized, a large amount of new information is available, warranting its own systematic review. And the cycle goes ever on.

Solving this challenge in the age of AI?

The recent advances in AI (hey, ChatGPT!) made us wonder: can we optimize the process of conducting a systematic review with the help of artificial intelligence? We searched the internet for such tools, and sure enough, there were many. We started with an initial list of 50 potential AI tools and examined their features in detail. We then narrowed the list down to AI tools made specifically for optimizing the process of conducting systematic literature reviews. We investigated the information available about these tools and evaluated them on several objective measures of ease of use, functionality and ethics. Read more about how we evaluated the tools below. Although we didn’t personally test each tool, this approach ensured that our evaluation was both systematic and unbiased.

Heads-up: AI ahead

Although these AI tools are still in an early stage of development, they can be quite impressive. Still, no matter how convincing its output, AI does not truly understand the meaning of scientific concepts; it only captures the patterns underlying the literature. That is why, in all these tools, the researcher has the final decision-making power and needs to verify the AI’s choices. AI tools can greatly reduce the resources a systematic review requires, but they are just assistants. High-quality evidence synthesis is only possible with the expertise of the human scientist at the receiving end.

It is hard to keep up with the advances in the AI field, including the tools that can be used in research. The objective of this blog is not only to present a quick and clean overview of the tools available at the time of writing, but also to provide the building blocks of a living platform covering new and old tools and their respective features. Such a systematic and objective platform is much needed for research in the present technological climate.

Read on to find out the top 3 AI tools for conducting systematic reviews. Or go here to find our tool that helps you choose the tool best suited to your needs.

Our top pick AI tools for each stage of the systematic literature review

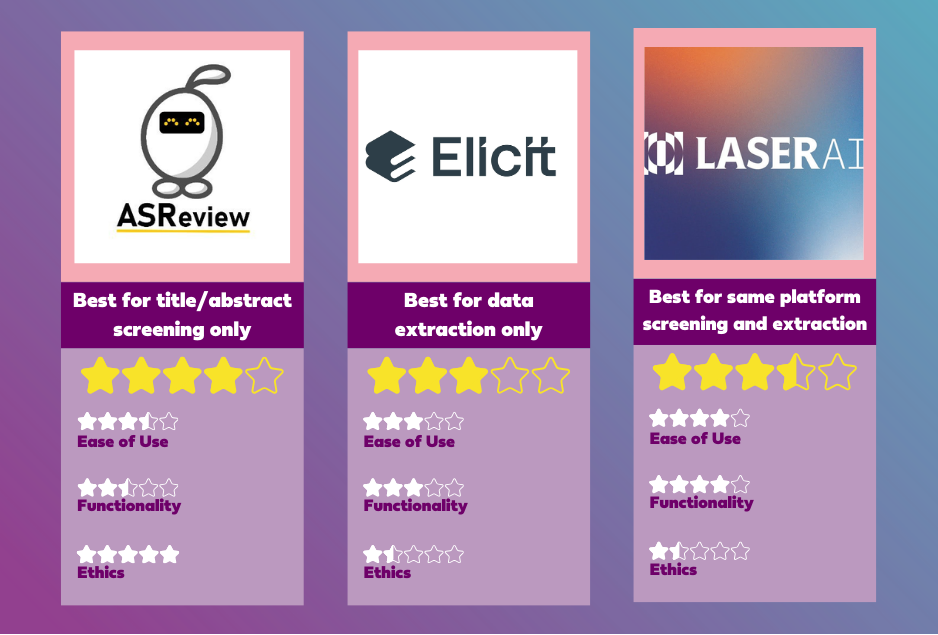

- Best for title/abstract screening: ASReview ★★★★☆

- Best for data extraction: Elicit ★★★☆☆

- Best combined platform: LaserAI ★★★✮☆

Choose the best tool for your research

As researchers, our needs can vary. The best tool depends on both the features and functionalities it offers and the demands of our project. We understand that what is ‘objectively best’ is not always the best choice for a given project. That is why we have worked on a tool that provides all the important information, including stages, features, AI models and pricing, as well as ratings on ease of use, functionality and ethical AI. Using the filter option, you can choose the stages, features and models you prefer. You will then see only the tools that match your specification. You can also choose what you find most important in a tool: its ease of use, functionality or ethical AI. The overall ratings will change based on the weight you give each criterion. You can then choose the tool best suited to your preferences.

To use the tool, go here.
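To make the filtering and weighting idea concrete, here is a minimal sketch in Python. It is not the code behind our tool: it assumes the overall rating is a weighted average of the three criterion ratings, and the names `Tool`, `weighted_rating` and `rank_tools`, as well as the tools and numbers in `EXAMPLE_TOOLS`, are hypothetical placeholders rather than real results.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """One tool with the review stages it covers and its three ratings (0-5)."""
    name: str
    stages: frozenset[str]
    ease_of_use: float
    functionality: float
    ethical_ai: float


def weighted_rating(tool: Tool, weights: dict[str, float]) -> float:
    """Weighted average of the three ratings (assumed formula, not the tool's own)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    score = sum(weight * getattr(tool, criterion) for criterion, weight in weights.items())
    return score / total


def rank_tools(tools, weights, required_stages=frozenset()):
    """Keep tools that cover every required stage, then sort best-first."""
    matching = [t for t in tools if set(required_stages) <= t.stages]
    return sorted(matching, key=lambda t: weighted_rating(t, weights), reverse=True)


# Placeholder data for illustration only; these are not ratings of real tools.
EXAMPLE_TOOLS = [
    Tool("Tool A", frozenset({"screening"}), 4.0, 4.5, 3.0),
    Tool("Tool B", frozenset({"screening", "extraction"}), 3.0, 3.5, 4.5),
    Tool("Tool C", frozenset({"extraction"}), 5.0, 2.0, 3.0),
]

equal = {"ease_of_use": 1, "functionality": 1, "ethical_ai": 1}
ethics_first = {"ease_of_use": 1, "functionality": 1, "ethical_ai": 3}

# Only tools that support screening are kept; the order depends on the weights.
print([t.name for t in rank_tools(EXAMPLE_TOOLS, equal, {"screening"})])
print([t.name for t in rank_tools(EXAMPLE_TOOLS, ethics_first, {"screening"})])
```

With equal weights the placeholder "Tool A" comes out ahead, but once ethical AI counts three times as much, "Tool B" takes the lead. That is the point of letting you set the weights yourself: the ranking follows your priorities.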

How we evaluated the tools

There are already many AI tools available for optimizing and streamlining the systematic review process. Many of them are somewhat similar in their functionality. We needed tools that could optimize tasks specific to systematic reviews across a range of articles and could save time. That means we have not considered other AI tools that can be used to perform small parts or tasks of systematic reviews, such as ChatGPT or Perplexity. Our final list includes 17 tools, which were evaluated by two of our researchers based on predefined evaluation criteria that included assessment of usability, functionality and ethical AI. In this table you can see the criteria that we used and how each tool performed on these criteria.

Check back soon! We will share our hands-on experience with the tools we picked. Follow us on social media and don’t miss out on what’s coming next!

This research was published on October 4, 2024.